HP + Galileo Partner to Accelerate Trustworthy AI

Posts tagged Large Language Models

Pinecone + Galileo = get the right context for your prompts

Galileo LLM Studio enables Pinecone users to identify and visualize the right context to add, powered by evaluation metrics such as the hallucination score, so you can power your LLM apps with the right context while engineering your prompts or running your LLMs in production.

A Framework to Detect & Reduce LLM Hallucinations

Learn how to identify and detect LLM hallucinations

Webinar - Deeplearning.ai + Galileo - Mitigating LLM Hallucinations

Join this workshop, where we will showcase some powerful metrics to evaluate the quality of the inputs (data quality, RAG context quality, etc.) and outputs (hallucinations), with a focus on both RAG and fine-tuning use cases.

Galileo x Zilliz: The Power of Vector Embeddings

5 Techniques for Detecting LLM Hallucinations

A survey of hallucination detection techniques

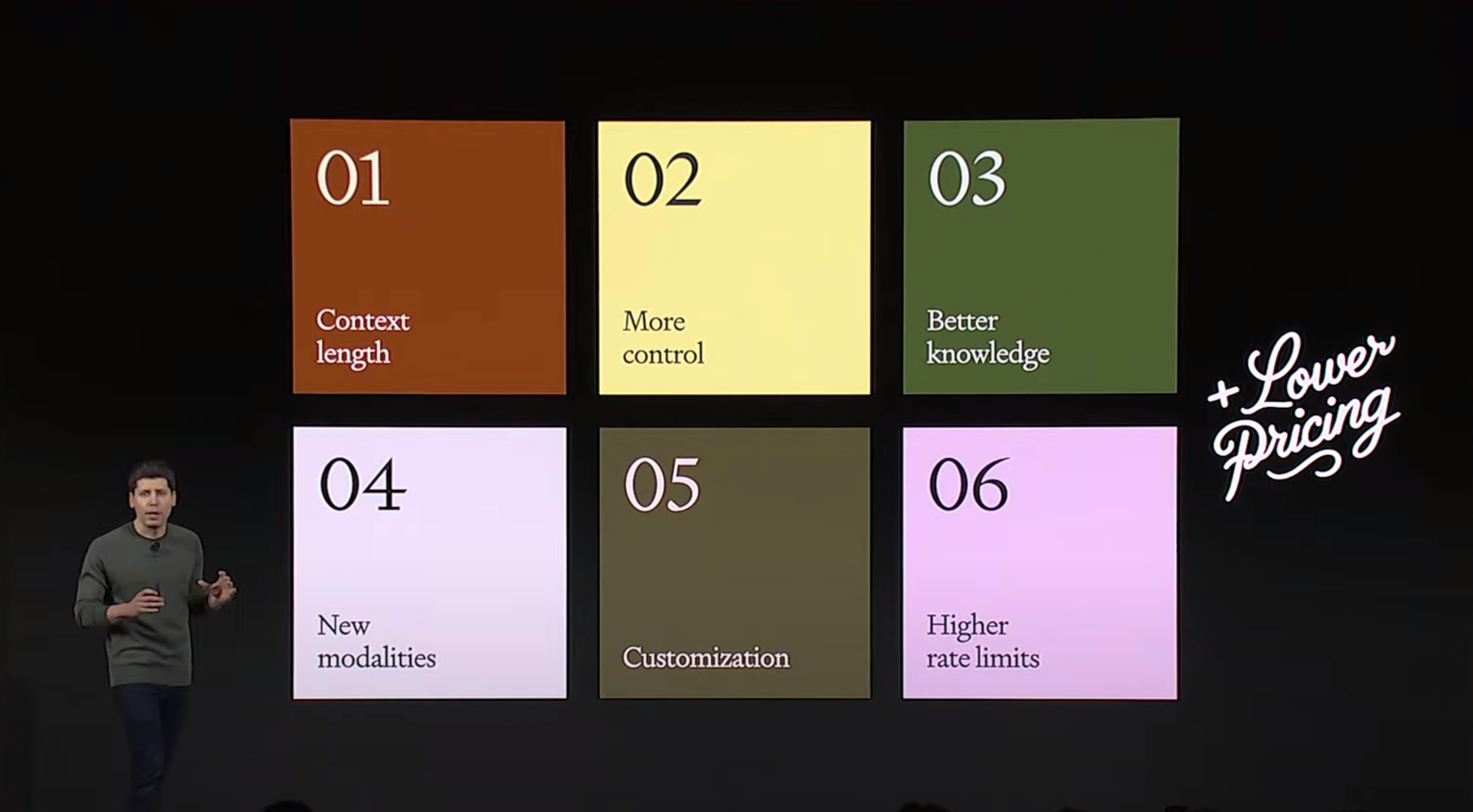

15 Key Takeaways From OpenAI Dev Day

Galileo's key takeaways from the 2023 OpenAI Dev Day, covering new product releases, upgrades, pricing changes, and more!

Galileo Luna: Breakthrough in LLM Evaluation, Beating GPT-3.5 and RAGAS

Research-backed evaluation foundation models for enterprise scale

Galileo + Google Cloud: Evaluate and Observe Generative AI Applications Faster

Galileo on Google Cloud accelerates evaluating and observing generative AI applications.

Mastering RAG: How To Observe Your RAG Post-Deployment

Learn to set up a robust observability solution for RAG in production

Introducing the Hallucination Index

The Hallucination Index provides a comprehensive evaluation of 11 leading LLMs' propensity to hallucinate during common generative AI tasks.

Mastering RAG: LLM Prompting Techniques For Reducing Hallucinations

Dive into our blog for advanced strategies like ThoT, CoN, and CoVe to minimize hallucinations in RAG applications. Explore emotional prompts and ExpertPrompting to enhance LLM performance. Stay ahead in the dynamic RAG landscape with reliable insights for precise language models. Read now for a deep dive into refining LLMs.

Mastering RAG: How To Architect An Enterprise RAG System

Explore the nuances of crafting an Enterprise RAG System in our blog, "Mastering RAG: Architecting Success." We break down key components to provide users with a solid starting point, fostering clarity and understanding among RAG builders.

Mastering RAG: 8 Scenarios To Evaluate Before Going To Production

Learn how to master RAG. Delve deep into 8 scenarios that are essential to test before going to production.

A Metrics-First Approach to LLM Evaluation

Learn about different types of LLM evaluation metrics needed for generative applications

Generative AI and LLM Insights: April 2024

Smaller LLMs can be better (if they have a good education), but if you’re trying to build AGI you’d better go big on infrastructure! Check out our roundup of the top generative AI and LLM articles for April 2024.

Mastering RAG: Choosing the Perfect Vector Database

Master the art of selecting a vector database based on various factors

Generative AI and LLM Insights: March 2024

Stay ahead of the AI curve! Our February roundup covers: Air Canada's AI woes, RAG failures, climate tech & AI, fine-tuning LLMs, and synthetic data generation. Don't miss out!

Generative AI and LLM Insights: May 2024

The AI landscape is exploding in size, with some early winners emerging, but RAG reigns supreme for enterprise LLM systems. Check out our roundup of the top generative AI and LLM articles for May 2024.

Announcing LLM Studio: A Smarter Way to Build LLM Applications

LLM Studio helps you develop and evaluate LLM apps in hours instead of days.

Understanding LLM Hallucinations Across Generative Tasks

The creation of human-like text with Natural Language Generation (NLG) has improved recently because of advancements in Transformer-based language models. This has made the text produced by NLG helpful for creating summaries, generating dialogue, or transforming data into text. However, there is a problem: these deep learning systems sometimes make up or "hallucinate" text that was not intended, which can lead to worse performance and disappoint users in real-world situations.

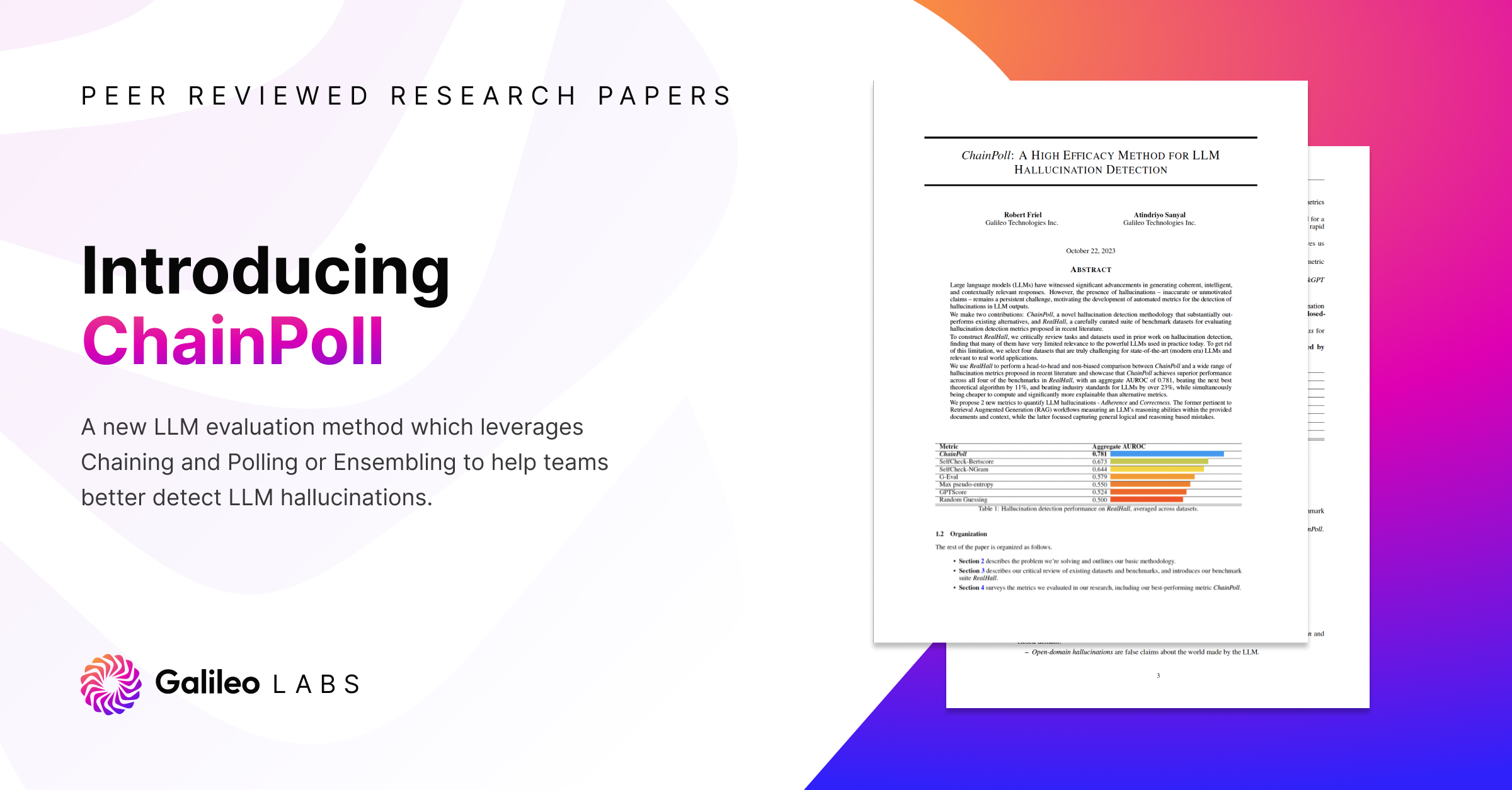

Paper - Galileo ChainPoll: A High Efficacy Method for LLM Hallucination Detection

ChainPoll leverages chaining and polling (ensembling) to help teams better detect LLM hallucinations. Read more at rungalileo.io/blog/chainpoll.

5 Key Takeaways From President Biden’s Executive Order For Trustworthy AI

Explore the transformative impact of President Biden's Executive Order on AI, focusing on safety, privacy, and innovation. Discover key takeaways, including the need for robust red-teaming processes, transparent sharing of safety tests, and privacy-preserving techniques.

Generative AI and LLM Insights: February 2024

February's AI roundup: Pinterest's ML evolution, NeurIPS 2023 insights, understanding LLM self-attention, cost-effective multi-model alternatives, essential LLM courses, and a safety-focused open dataset catalog. Stay informed in the world of Gen AI!

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance

A comprehensive guide to retrieval-augmented generation (RAG), fine-tuning, and their combined strategies in Large Language Models (LLMs).

Is Llama 3 better than GPT4?

Llama 3 insights from the leaderboards and experts

Survey of Hallucinations in Multimodal Models

An exploration of the types of hallucinations in multimodal models and ways to mitigate them.

Solving Challenges in GenAI Evaluation – Cost, Latency, and Accuracy

Learn to do robust evaluation and beat the current SoTA approaches
