HP + Galileo Partner to Accelerate Trustworthy AI

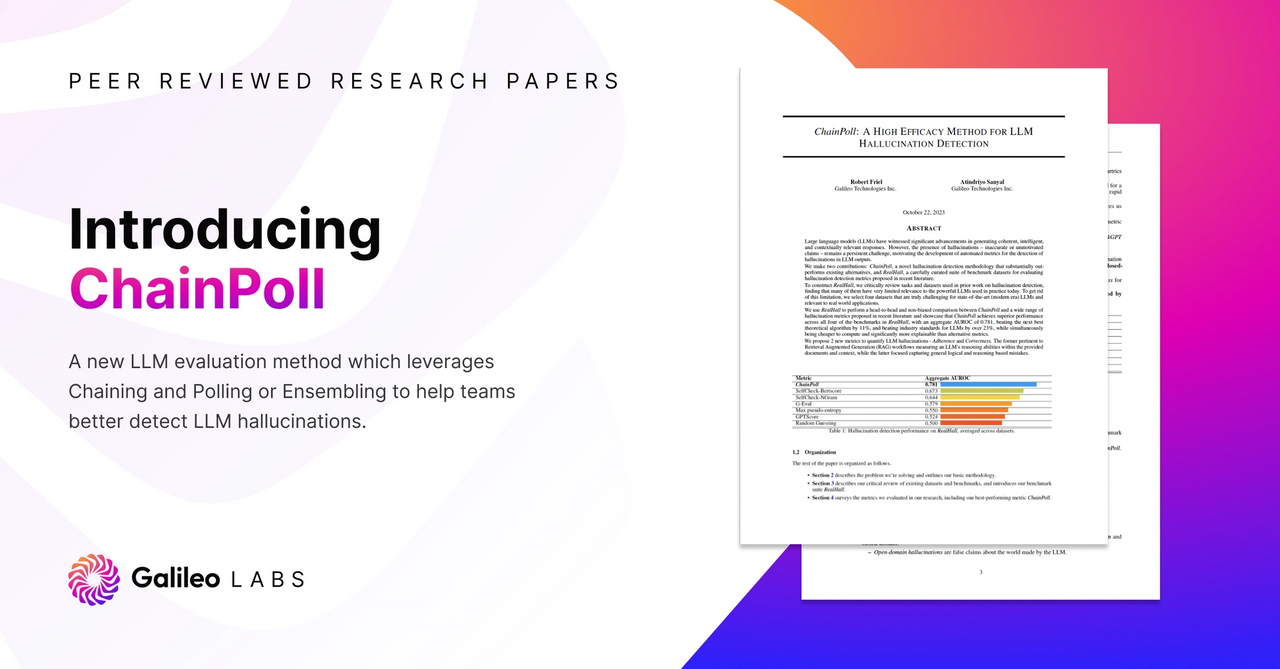

Paper - Galileo ChainPoll: A High Efficacy Method for LLM Hallucination Detection

Abstract:

Large language models (LLMs) have witnessed significant advancements in generating coherent, intelligent, and contextually relevant responses. However, the presence of hallucinations – inaccurate or unmotivated claims – remains a persistent challenge, motivating the development of automated metrics for the detection of hallucinations in LLM outputs.

We make two contributions: ChainPoll, a novel hallucination detection methodology that substantially outperforms existing alternatives, and RealHall, a carefully curated suite of benchmark datasets for evaluating hallucination detection metrics proposed in recent literature.

To construct RealHall, we critically review tasks and datasets used in prior work on hallucination detection, finding that many of them have very limited relevance to the powerful LLMs used in practice today. To get rid of this limitation, we select four datasets that are truly challenging for state-of-the-art (modern era) LLMs and relevant to real-world applications.

We use RealHall to perform a head-to-head and non-biased comparison between ChainPoll and a wide range of hallucination metrics proposed in recent literature and showcase that ChainPoll achieves superior performance across all four of the benchmarks in RealHall, with an aggregate AUROC of 0.781, beating the next best theoretical algorithm by 11%, and beating industry standards for LLMs by over 23%, while simultaneously being cheaper to compute and significantly more explainable than alternative metrics.

We propose 2 new metrics to quantify LLM hallucinations - Adherence and Correctness. The former pertinent to Retrieval Augmented Generation (RAG) workflows measuring an LLM’s reasoning abilities within the provided documents and context, while the latter focused capturing general logical and reasoning based mistakes.

To read the rest of this paper, click here.

Working with Natural Language Processing?

Read about Galileo’s NLP Studio