Generative AI and LLM Insights: March 2024

Table of contents

- Air Canada loses court case after its chatbot hallucinated fake policies to a customer

- 7 common failure points in RAG systems

- How climate tech startups use generative AI to address the climate crisis

- How to Fine-Tune LLMs in 2024

- The Unhyped Importance of Capable AI

- How to Generate and Use Synthetic Data for Fine-tuning

Fine-tuning and RAG help get the most out of LLMs (using synthetic data or otherwise), but even then you may be legally liable for hallucinations. Check out our roundup of the top generative AI and LLM articles for March 2024!

Air Canada loses court case after its chatbot hallucinated fake policies to a customer

In a first, an LLM hallucination has caused Air Canada to lose in court. Companies are liable for what their chat apps generate, making LLM evaluation and observability more important than ever: https://mashable.com/article/air-canada-forced-to-refund-after-chatbot-misinformation

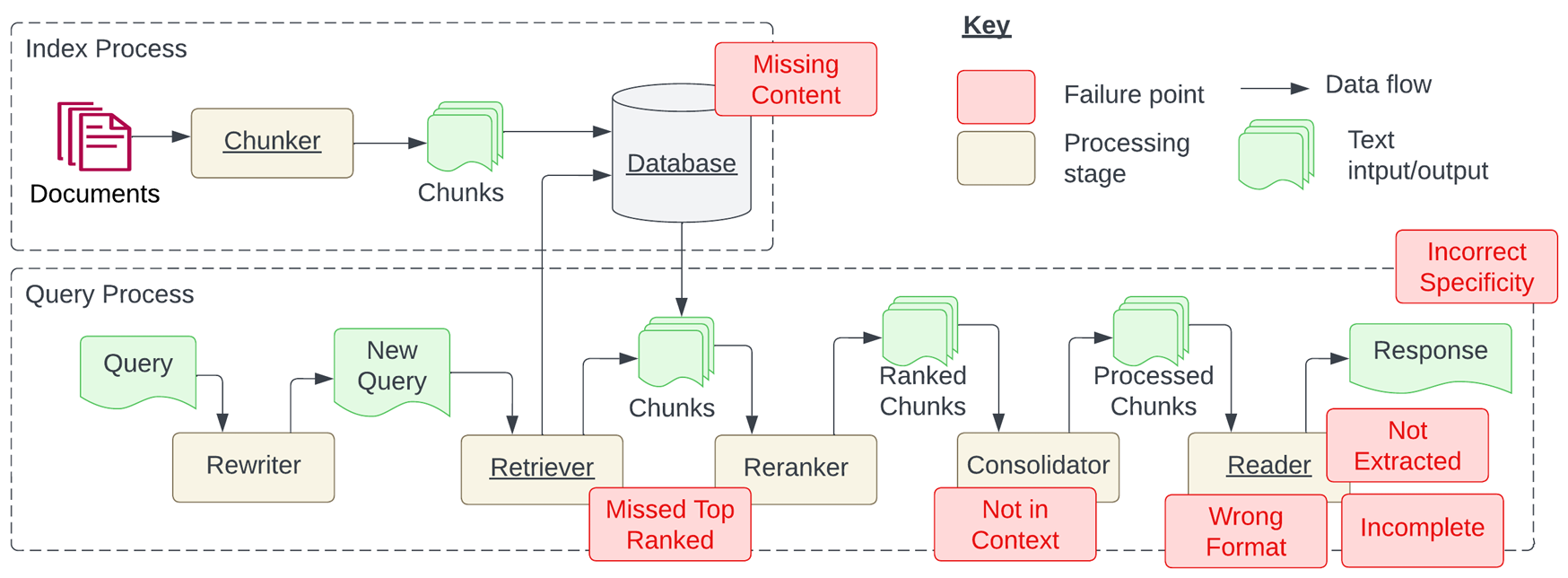

7 common failure points in RAG systems

From mis-ranked documents to extraction failures, researchers have identified what causes issues in RAG systems and shared the lessons they learned: https://www.latent.space/p/neurips-2023-papers

How climate tech startups use generative AI to address the climate crisis

Climate change affects us all. Here are a few climate tech startups using GenAI to help stop the climate crisis: https://aws.amazon.com/blogs/startups/how-climate-tech-startups-use-generative-ai-to-address-the-climate-crisis

How to Fine-Tune LLMs in 2024

There's a whole buffet of LLMs available, but to get real value out of them, AI teams need to fine-tune models on their own data. Here's a great guide on how to get it done: https://www.philschmid.de/fine-tune-llms-in-2024-with-trl

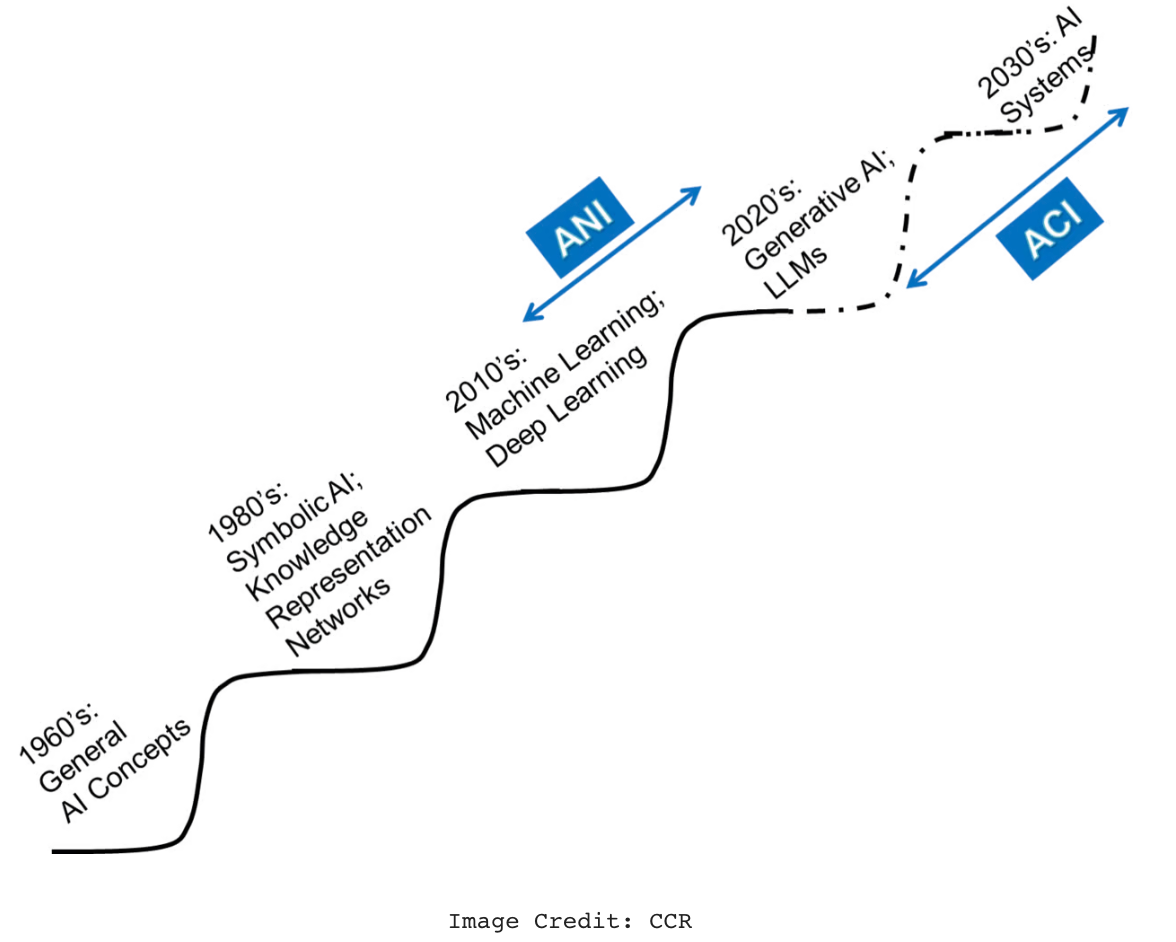

The Unhyped Importance of Capable AI

There's much hype (and concern) about AGI and superintelligence. But let's not disregard our current drive towards "capable" AI. Learn why this un-hyped phase deserves more respect and attention: https://www.turingpost.com/p/capableai

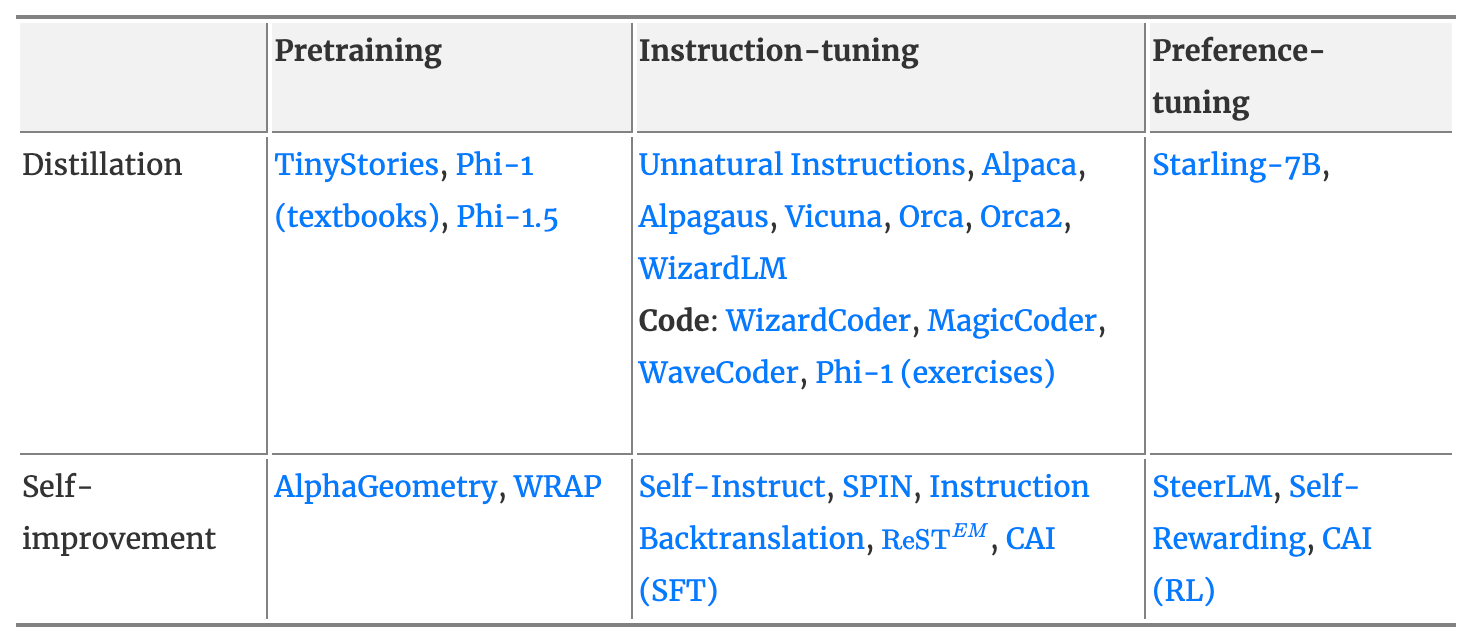

How to Generate and Use Synthetic Data for Fine-tuning

Synthetic data is increasingly viable for pretraining, instruction-tuning, and preference-tuning. Do you know the two main approaches – distillation and self-improvement – for this cheaper, faster alternative to human annotation? https://eugeneyan.com/writing/synthetic
