Posts tagged Hallucinations

Mastering RAG: Improve RAG Performance With 4 Powerful RAG Metrics

Learn four essential metrics for analyzing RAG systems and improving their performance.

A Framework to Detect & Reduce LLM Hallucinations

Learn how to identify and detect LLM hallucinations.

Webinar - Deeplearning.ai + Galileo - Mitigating LLM Hallucinations

Join this workshop, where we will showcase powerful metrics for evaluating the quality of inputs (data quality, RAG context quality, etc.) and outputs (hallucinations), with a focus on both RAG and fine-tuning use cases.

Webinar - Announcing Galileo LLM Studio: A Smarter Way to Build LLM Applications

5 Techniques for Detecting LLM Hallucinations

A survey of hallucination detection techniques

Galileo Luna: Breakthrough in LLM Evaluation, Beating GPT-3.5 and RAGAS

Research-backed evaluation foundation models for enterprise scale

Mastering RAG: Advanced Chunking Techniques for LLM Applications

Learn advanced chunking techniques tailored for Large Language Model (LLM) applications with our Mastering RAG guide. Elevate your projects with efficient chunking methods that improve information processing and generation.

Introducing the Hallucination Index

The Hallucination Index provides a comprehensive evaluation of 11 leading LLMs' propensity to hallucinate during common generative AI tasks.

Mastering RAG: LLM Prompting Techniques For Reducing Hallucinations

Dive into our blog for advanced strategies like ThoT, CoN, and CoVe to minimize hallucinations in RAG applications. Explore emotional prompts and ExpertPrompting to enhance LLM performance. Stay ahead in the dynamic RAG landscape with reliable insights for precise language models. Read now for a deep dive into refining LLMs.

Mastering RAG: 8 Scenarios To Evaluate Before Going To Production

Learn how to master RAG. Delve into 8 scenarios that are essential to test before going to production.

A Metrics-First Approach to LLM Evaluation

Learn about different types of LLM evaluation metrics needed for generative applications

Webinar – Galileo Protect: Real-Time Hallucination Firewall

We’re excited to announce Galileo Protect – an advanced GenAI firewall that intercepts hallucinations, prompt attacks, security threats, and more in real-time! Register for our upcoming webinar to see Protect live in action.

Introducing Galileo Protect: Your Real-Time Hallucination Firewall

We're thrilled to unveil Galileo Protect, an advanced GenAI firewall solution that intercepts hallucinations, prompt attacks, security threats, and more in real-time.

Understanding LLM Hallucinations Across Generative Tasks

Natural Language Generation (NLG) has become much better at producing human-like text thanks to advances in Transformer-based language models. As a result, NLG output is now useful for summarization, dialogue generation, and data-to-text tasks. However, these deep learning systems sometimes make up, or "hallucinate", text that was not intended, which degrades performance and disappoints users in real-world settings.

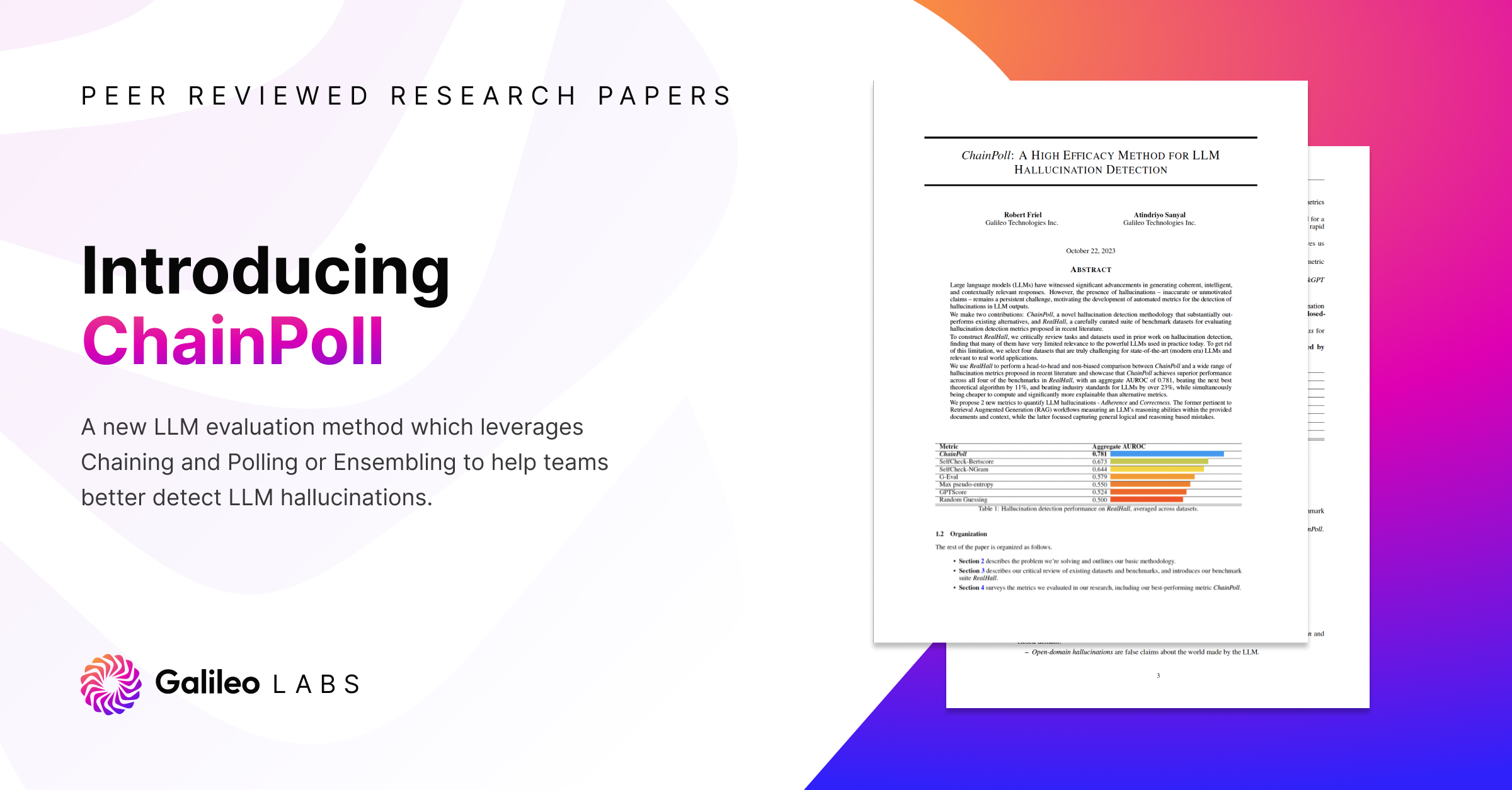

Paper - Galileo ChainPoll: A High Efficacy Method for LLM Hallucination Detection

ChainPoll leverages chaining and polling (ensembling) to help teams better detect LLM hallucinations. Read more at rungalileo.io/blog/chainpoll.

5 Key Takeaways From President Biden’s Executive Order For Trustworthy AI

Explore the impact of President Biden's Executive Order on AI, focusing on safety, privacy, and innovation. Discover key takeaways, including the need for robust red-teaming processes, transparent sharing of safety test results, and privacy-preserving techniques.

Webinar - Fix Hallucinations in RAG Systems with Pinecone and Galileo

Watch our webinar with Pinecone on optimizing RAG & chain-based GenAI! Learn strategies to combat hallucinations, leverage vector databases, and enhance RAG analytics for efficient debugging.

RAG Vs Fine-Tuning Vs Both: A Guide For Optimizing LLM Performance

A comprehensive guide to retrieval-augmented generation (RAG), fine-tuning, and their combined strategies in Large Language Models (LLMs).

Survey of Hallucinations in Multimodal Models

An exploration of the types of hallucinations in multimodal models and ways to mitigate them.
