Enough Strategy, Let's Build: How to Productionize GenAI

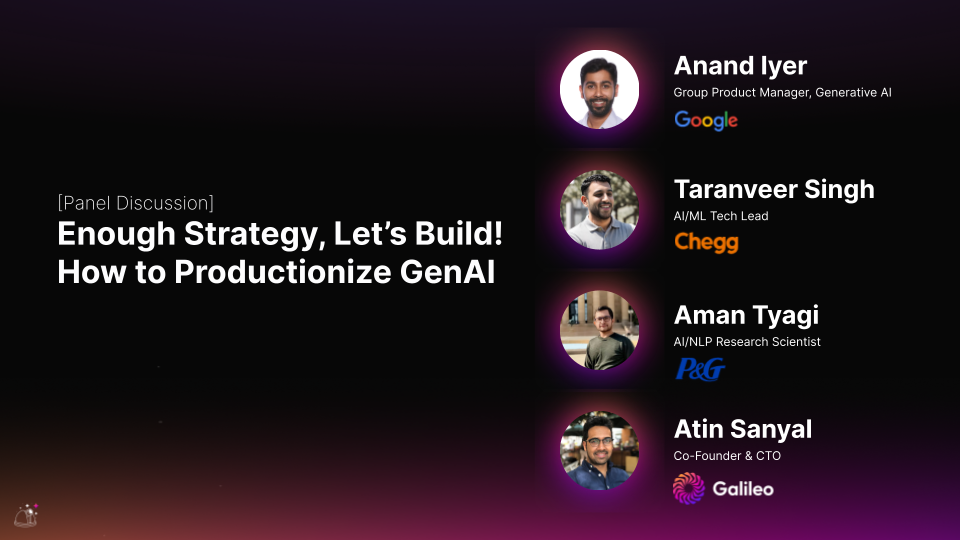

It’s time to get building! At GenAI Productionize 2024, expert practitioners covered the generative AI lifecycle and best practices for deploying genAI at enterprise scale, drawing on their own experiences and mistakes to share tools and techniques for success. The session featured Anand Iyer, Group Product Manager at Google Cloud Generative AI; Taranveer Singh, AI Tech Lead at Chegg; and Aman Tyagi, Senior AI Research Scientist at Procter & Gamble. Read the key takeaways below, or better yet, watch the entire session.

But above all, remember… eyeballing is not evaluation!

Learn By Doing

Before you can start deploying generative AI, you need to build the right skills. Our panelists recommend focusing on practical skills and tools: learn how to interact with LLMs and use them in real-world applications, rather than worrying about the inner workings of the transformer architecture or other behind-the-scenes details. Hands-on experience, for example with chatbots, hones your prompting technique and gives you insight into how models respond. Before you can productionize an application, you need to be able to prompt effectively and give the LLM the right context for the task, most often through a retrieval-augmented generation (RAG) system. Experiment with frameworks like LangChain, Semantic Kernel, and AutoGen to build simple agent-based applications and grow your skills.
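To make "giving the LLM the right context" concrete, here is a minimal sketch of the retrieval-and-prompt step of a RAG system. It assumes a tiny in-memory document list and ranks documents by naive keyword overlap, standing in for the embedding model and vector store a real system would use. The names `retrieve` and `build_prompt` are hypothetical, not part of LangChain or any other framework, and the actual LLM call is left out.

```python
import re

# Hypothetical helpers: keyword overlap stands in for the embedding
# search and vector store a production RAG system would use.


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,  # sorted() stays stable, so ties keep their original order
    )
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place retrieved passages ahead of the question to ground the answer."""
    passages = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you don't know.\n\n"
        f"Context:\n{passages}\n\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refund requests require an order number.",
]
question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

In practice you would send `prompt` to your model of choice. Swapping keyword overlap for embedding similarity changes only `retrieve`, which is why it helps to keep retrieval and prompt assembly as separate steps you can test on their own.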

Automation and Collaboration

Having the right infrastructure and culture in place is essential for launching genAI initiatives. Invest in MLOps tooling and automation to help scale your work, including robust infrastructure for model hosting, prompt storage, annotations, and LLM evaluation. But technology is only one piece of the puzzle; cross-disciplinary collaboration is the secret to success. Blend ML specialists, engineers, and product teams to quickly prototype solutions and overcome common challenges around cost, latency, and throughput.

Start Small, Stay Safe

Don’t try to launch an all-purpose genAI application; instead, start with a limited scope built around a clearly defined user journey with concrete ROI measures. If you can’t measure your project’s ROI, or can’t evaluate how effective your genAI solution is, the scope is too broad and should be narrowed to a smaller use case.

Safety and security need to stay top-of-mind regardless of your use case. Test your solution in a structured, intentional way using well-defined datasets before releasing it into the wild, and apply privacy-by-design practices from the development stage onward. Mitigate harmful outputs and impacts by monitoring bias, fairness, toxicity, and other safety metrics. If necessary, keep a human in the loop to confirm that outputs are appropriate before they reach end users. Monitor your genAI application both before and after it goes into production to catch hallucinations or leaked personally identifiable information (PII), and dig into their root causes.
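As a contrast to eyeballing outputs, the sketch below runs a small, fixed test set through an application and reports two things: a pass rate from a simple expected-substring check, and any outputs that match crude email or US phone-number regexes as possible PII. The `fake_app` function, the `EvalCase` type, and the substring metric are all hypothetical placeholders; a real evaluation would use richer metrics and a dedicated PII detector.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Crude illustrative patterns; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "us_phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


@dataclass
class EvalCase:
    prompt: str
    must_contain: str  # hypothetical metric: expected substring in the answer


def find_pii(text: str) -> list[str]:
    """Names of the PII patterns that match anywhere in the text."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]


def evaluate(app: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through the app; report pass rate and PII hits."""
    passed = 0
    pii_flags = []
    for case in cases:
        output = app(case.prompt)
        if case.must_contain.lower() in output.lower():
            passed += 1
        hits = find_pii(output)
        if hits:
            pii_flags.append((case.prompt, hits))
    return {"pass_rate": passed / len(cases), "pii_flags": pii_flags}


def fake_app(prompt: str) -> str:
    """Hypothetical stand-in for a real genAI application."""
    if "refund" in prompt.lower():
        return "Email support@example.com to request a refund."
    return "We are open 9-5 on weekdays."


cases = [
    EvalCase("How do I get a refund?", "support"),
    EvalCase("When are you open?", "9-5"),
    EvalCase("Where are you located?", "Main Street"),
]
report = evaluate(fake_app, cases)
print(report)
```

Here two of the three cases pass, and the refund answer is flagged because it contains an email address. Flagged outputs like this are natural candidates for human review before anything reaches end users, and rerunning the same fixed cases after each change makes regressions visible.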

This is just the tip of the iceberg! Watch the entire session for a deeper understanding of how to get started building your own generative AI applications.