HP + Galileo Partner to Accelerate Trustworthy AI

Ready for Regulation: Preparing for the EU AI Act

Introduction

The EU AI Act, originally drafted in 2021, is close to being ratified by member countries and will soon impact businesses operating in the EU. This groundbreaking legislation establishes a risk-based tiered system that discerns between high-risk and general-purpose AI systems, providing clear directives for achieving compliance. Failing to comply with the act would force companies to steer clear of the region or restrict access to products — something firms grappled previously with the Digital Services Act and the Digital Markets Act. Time is of the essence for AI companies to prepare for the requirements. Is your team ready?

In this blog post, we'll delve into the details of the act, its prohibitions, requirements for high-risk AI companies, consequences of non-compliance, and how companies can meet the stringent requirements set forth by the EU AI Act, including key steps teams can take today.

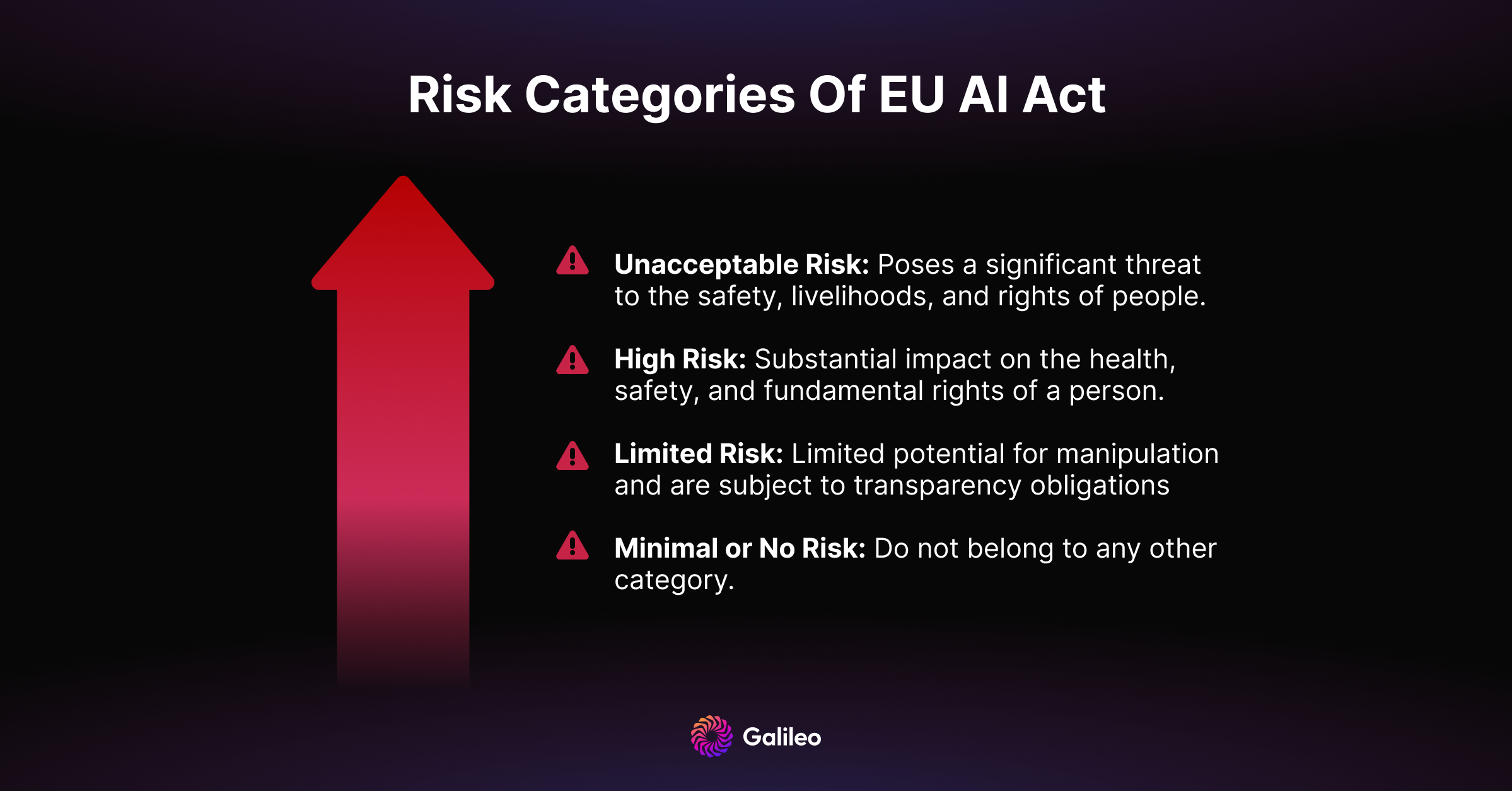

Risk Categories

The EU AI Act categorizes AI systems into four risk categories, which determine the level of regulation and oversight required.

1. Unacceptable Risk

AI systems that pose a significant threat to the safety, livelihoods, and rights of people will be prohibited.

Examples include:

- Cognitive behavioral manipulation of individuals, like voice-activated toys that promote hazardous behavior in children.

- Social scoring and classification of individuals based on their behavior, socio-economic status, or personal characteristics.

- Biometric identification and categorization of individuals, such as facial recognition in publicly accessible spaces by law enforcement authorities unless necessary.

2. High Risk

AI systems that can have a substantial impact on the health, safety, and fundamental rights of a person. They will be subject to strict obligations before they can be put on the market, including adequate risk assessment and mitigation systems.

Examples include:

- Safety components in the management and operation of critical digital infrastructure, road traffic and the supply of water, gas, heating and electricity.

- Education and vocational training systems used to determine access to institutions or programs at all levels

- Employment or workers management systems used for recruitment, selection, promotion, or termination of work

- Essential private or public services and benefits, such as public assistance benefits, credit scoring, emergency services, and insurance risk assessments

- Law enforcement systems used to detect the emotional state of a person, determine risks of offending or reoffending, evaluate evidence in an investigation, predict potential criminal offenses, or profile persons

- Migration, asylum and border control management systems used to detect the emotional state of a person, assess the risks posed by a person, or examine applications for asylum, visa or residence permits

- Systems used to administer justice and democratic processes, including interpreting facts or the law

3. Limited Risk

AI systems with limited potential for manipulation are only subject to transparency obligations such as:

- Systems interacting with natural persons must clearly inform them of their AI nature, unless it is evident to a reasonably well-informed, observant, and circumspect person considering the circumstances.

- Systems generating or manipulating image, audio, or video content resembling real entities must disclose if the content is artificially created or manipulated to prevent deceptive appearances ('deep fakes').

Examples include:

- Systems that interacts with humans (e.g. chatbots)

- Emotion recognition systems

- Generative AI which can manipulate image, audio or video content (e.g. deepfakes)

4. Minimal or No Risk

AI systems that do not belong in any other category are considered to pose minimal or no risk. They will be subject to the least stringent regulations.

Examples include:

- AI-enabled video games using AI to enhance gameplay, such as by adapting the game's difficulty based on the player's skill level

- Inventory-management systems optimizing inventory levels, streamlining supply chains, or improving logistics

- Market segmentation systems analyzing customer data to create targeted marketing campaigns or improve customer experiences

This risk-based approach aims to ensure that AI systems are safe and respect the fundamental rights of individuals, while promoting innovation in the AI sector.

Conformity Assessment for High Risk AI

Being classified as "high risk" under the EU AI Act has several implications for companies and AI teams. Article 43 outlines two procedures for conformity assessment that AI providers must choose from under the EU AI Act.

1. Conformity assessment based on internal control (Annex VI):

- Verification of the established quality management system's compliance with requirements.

- Examination of technical documentation to assess AI system compliance with essential requirements.

- Verification that the design and development process and post-market monitoring aligns with technical documentation.

2. Conformity assessment based on quality management system and technical documentation assessment with the involvement of a notified body (Annex VII):

- Examination of the approved quality management system for AI system design, development, and testing by a notified body.

- Surveillance of the quality management system by a notified body.

- Assessment of technical documentation by a notified body, including access to datasets.

The Quality Management System Assessment of act contain the following components:

Regulatory compliance strategy

The quality management system must incorporate a strategy for regulatory compliance, encompassing adherence to conformity assessment procedures and management procedures for modifications to the high-risk AI system.

Design control and verification

Techniques, procedures, and systematic actions for the design, design control, and design verification of the high-risk AI system must be clearly articulated within the quality management system.

Development, quality control, and assurance

The system should define techniques, procedures, and systematic actions governing the development, quality control, and quality assurance of the high-risk AI system.

Examination, test, and validation procedures

The quality management system must outline examination, test, and validation procedures to be conducted before, during, and after the development of the high-risk AI system, specifying the frequency of these processes.

Technical specifications and standards

Technical specifications, including standards, are to be identified, and if relevant harmonized standards are not fully applied, the means to ensure compliance with the requirements should be detailed.

Data management systems and procedures

Robust systems and procedures for data management, covering data collection, analysis, labeling, storage, filtration, mining, aggregation, retention, and any other data-related operations preceding the market placement or service initiation of high-risk AI systems, are integral to the quality management system.

Risk management system

The risk management system outlined in Article 9 must be incorporated within the quality management framework.

Post-market monitoring

Procedures for the establishment, implementation, and maintenance of a post-market monitoring system, as per Article 61, are essential components of the quality management system.

Reporting of serious incidents

Provisions for procedures related to the reporting of a serious incident in compliance with Article 62 must be clearly defined.

Communication protocols

Guidelines for communication with national competent authorities, sectoral competent authorities, notified bodies, other operators, customers, or other interested parties should be established within the quality management system.

Record keeping

Systems and procedures for comprehensive record-keeping of all relevant documentation and information are imperative.

Resource management

The quality management system must address resource management, including measures ensuring the security of supply.

Accountability framework

An accountability framework delineating the responsibilities of management and other staff concerning all aspects specified in this paragraph is a fundamental requirement within the quality management system.

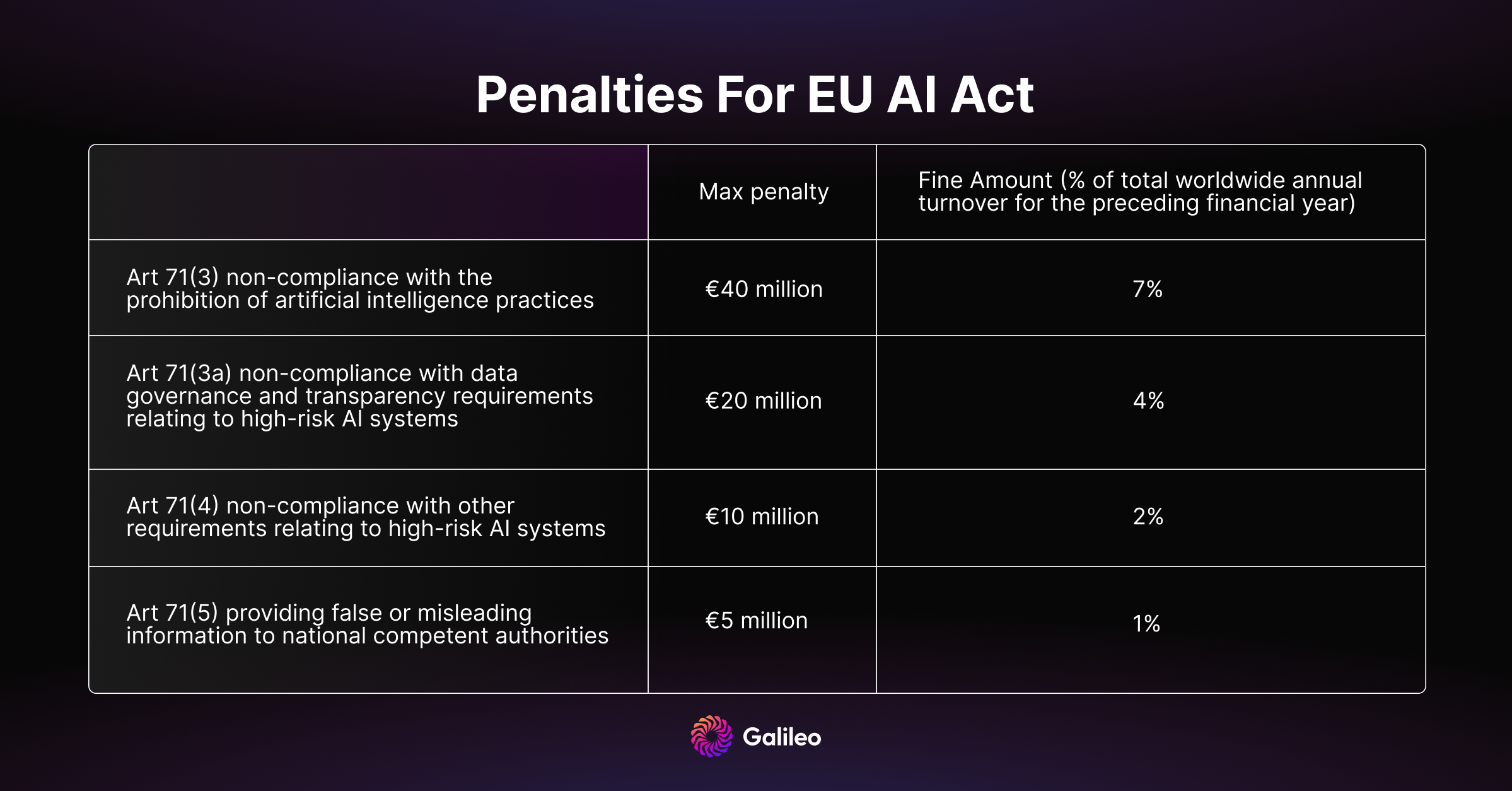

Consequences of Non-Compliance

Non-compliance with the EU AI Act carries hefty penalties depending on the infringement's severity and the company's size.

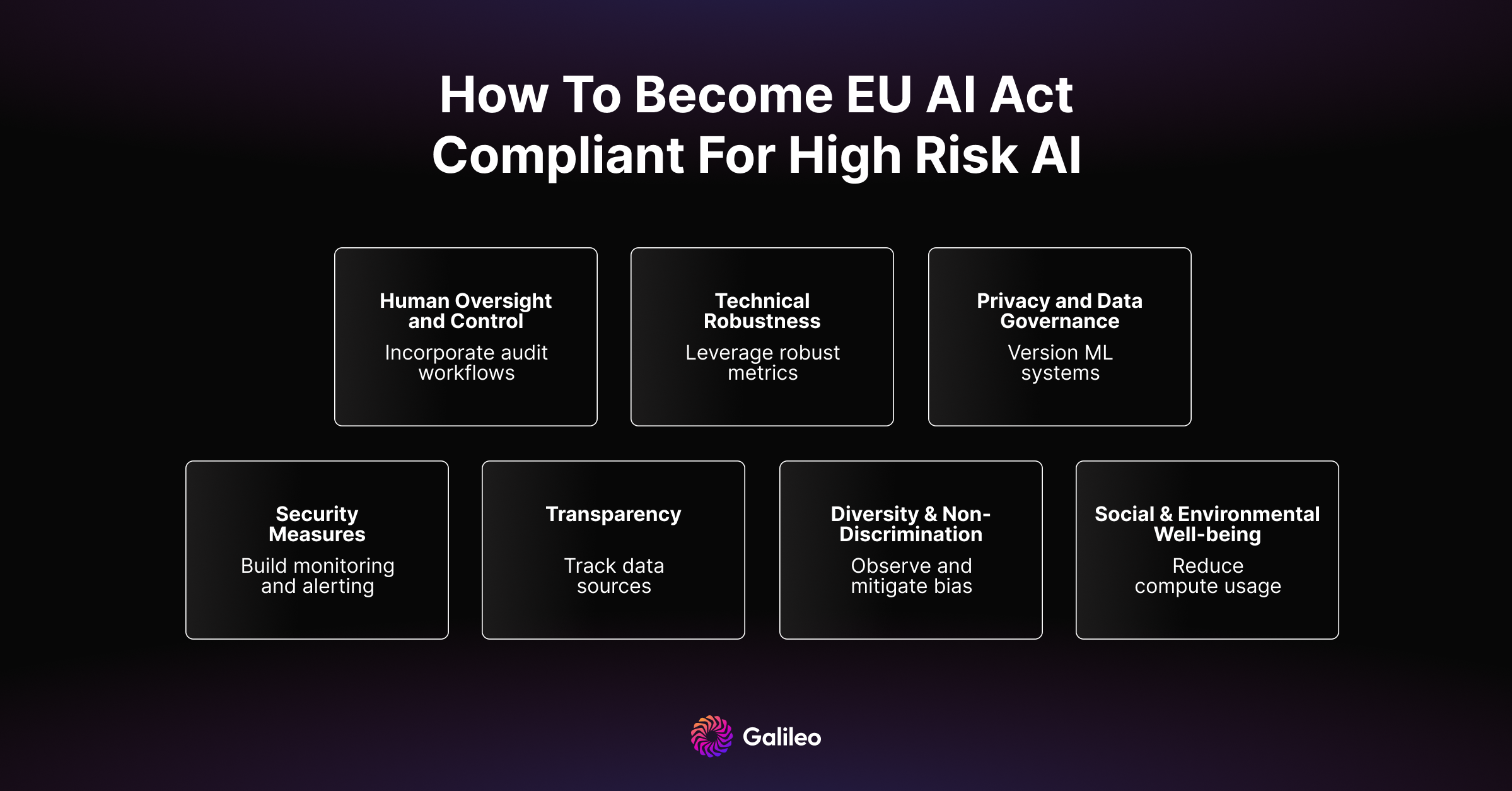

How to Become EU AI Act Compliant

While the EU AI Act outlines specific requirements for compliance, practical implementation often requires integrating advanced tools and implementing internal processes. Companies should consider the following aspects:

Human oversight and control

- Establish systematic and auditable platforms for human evaluation of AI system deployment.

- Document explicit approvals for production rollout to enhance accountability and transparency.

- Provide internal teams with visibility into model inputs and outputs, including explanations for outputs.

- Flag instances of low model confidence for human review.

- Integrate an override mechanism for human reviewers in ambiguous or sensitive situations.

- Include mechanisms for users to flag and review ambiguous, sensitive, or potentially harmful outputs.

Technical robustness

- Utilize robust metrics for detecting hallucinations and accurate system outputs.

- Conduct thorough testing of high-risk AI systems in real-world conditions at various development stages.

- Implement technical solutions to prevent and control attacks such as data poisoning or adversarial examples.

Privacy and data governance

- Systematically track ML versions and make data auditable with registered compliance checks.

- Utilize access controls and data tracking mechanisms for privacy maintenance.

- Collect and process only necessary data, minimizing privacy risks.

- Implement clear and transparent consent mechanisms for data collection and processing.

- Maintain comprehensive documentation of data sources, processing steps, and model development.

Security measures

- Implement mechanisms for detecting prompt injections and monitoring unauthorized modifications.

- Utilize encryption techniques for data protection in transit and at rest.

- Restrict access to sensitive data to authorized personnel only.

- Ensure robustness against adversarial attacks and unexpected inputs.

- Evaluate third-party services for adherence to security standards and conduct security assessments.

Transparency

- Use tools providing traceability of data sources for compliance and transparency.

- Document language models in detail, including architecture, training data, and key design choices.

- Foster open communication with stakeholders, users, and the broader community.

- Create and share model cards containing information about behavior, performance, limitations, biases, and ethical considerations.

Diversity & non-discrimination

- Ensure that training data is diverse and representative of various demographics, including different genders, ethnicities, ages, and cultural backgrounds. This helps the model to be more inclusive and less likely to exhibit biased behavior.

- Regularly assess the model's outputs for potential biases and take corrective actions as needed. This includes addressing biases related to gender, ethnicity, and other protected characteristics.

- Establish clear ethical guidelines and policies for the development. These guidelines should explicitly state a commitment to diversity, non-discrimination, and fairness in AI applications.

- Provide users with controls to influence or adjust the behavior of the language model. This may include settings to customize the model's sensitivity to certain topics or preferences, allowing users to have a more personalized experience.

- Conduct regular audits and assessments of the language model's performance with respect to diversity and non-discrimination. This includes evaluating its outputs, identifying potential issues, and implementing improvements.

Social & environmental well-being

- Implement tools that track compute usage and costs, allowing companies to balance social and environmental impacts.

- Explore model quantization techniques to reduce the computational resources required during inference.

- Implement model pruning techniques to reduce the size of the model, leading to lower compute requirements without compromising performance significantly.

Conclusion

Compliance with the EU AI Act goes beyond a mere legal duty; it is a strategic imperative for companies utilizing AI systems, setting a precedent for forthcoming regulations globally.

Thankfully, you are not alone! By harnessing Galileo's suite of capabilities, businesses can effectively navigate the complex landscape of regulatory standards.

Galileo Prompt facilitates the rapid development of high-performing prompts, ensuring companies can swiftly build transparent and safe AI systems.

Galileo Monitor helps with a proactive stance on security and performance concerns by detecting any related potential issues such as hallucinations.

Galileo Finetune aids in the construction of diverse and high-quality datasets for making robust models.

By leveraging these compliance-centric tools, companies can meet regulatory requirements and build trustworthy AI for their customers and users. Request a demo today to begin your journey to compliance!

Subscribe to Newsletter

Working with Natural Language Processing?

Read about Galileo’s NLP Studio