
The Enterprise AI Adoption Journey

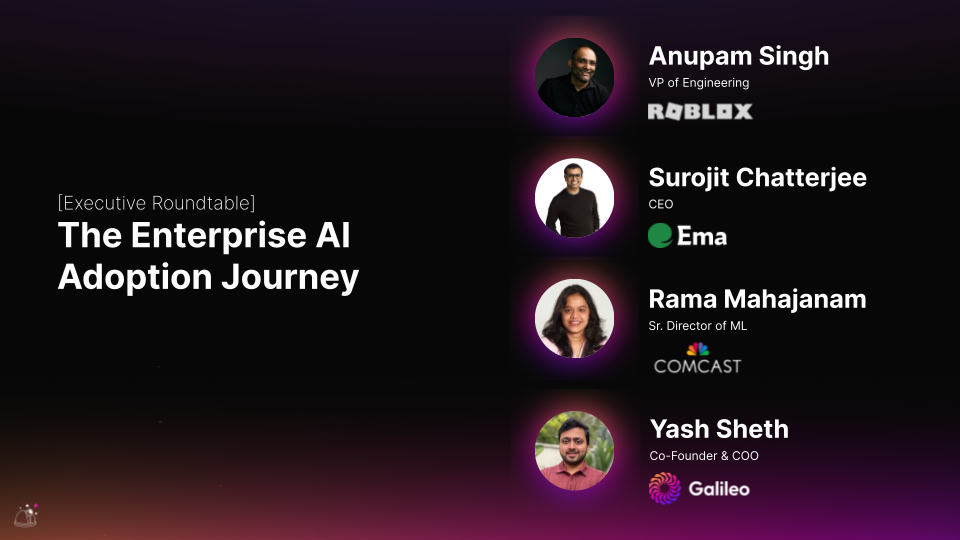

2024 has been a landmark year for generative AI, with enterprises moving from experimental proofs of concept to production use cases. But how can businesses deliver tangible value while stakeholders grow impatient for results? At GenAI Productionize 2024, our panel of enterprise executives walked us through their AI adoption journeys and shared lessons learned along the way. Here are three brief takeaways from the session, which featured Anupam Singh, VP of Engineering at Roblox; Surojit Chatterjee, Founder & CEO of Ema; and Rama Mahajanam, Sr. Director of ML at Comcast.

But honestly, do yourself a favor and just watch the whole session below.

Prove ROI With Simple Use Cases

AI excitement is at an all-time high. By starting small with a well-defined use case, AI teams can set realistic expectations at the board level before tackling more ambitious projects. Rama shared how Comcast’s RAG-based “Ask Me Anything” project did exactly this, delivering tangible business value through reduced operational time and an improved user experience. Business units across enterprises often have expensive workflows that GenAI can streamline immediately, producing measurable cost savings that make ROI provable. Employees freed from those workflows can also be reassigned to more impactful work, adding further measurable value to the business.

Manage Risks From The Beginning

Recent high-profile mishaps, like Air Canada’s hallucination court case, demonstrate the importance of risk management for any enterprise GenAI use case. Even with RAG-based approaches, out-of-domain queries can produce embarrassing or even harmful responses. The opaque nature of LLM training data and decision-making makes these risks particularly hard to manage. So what can businesses do?

Legal precautions, such as disclaimers and comprehensive documentation, can help manage user expectations and reduce liability. Going a step further, “human in the loop” practices add another layer of protection and can make it safer to deploy more experimental use cases. But teams need to be cautious when sending sensitive data through public APIs to proprietary LLM providers. There’s often little guidance on the safety and confidentiality of this data, especially multimodal data, which may be used to further train the LLM without the user’s consent. Enterprise AI teams must balance innovation with responsible risk management before deploying GenAI.

Structure Teams for Success

Each AI use case can have unique requirements that are best addressed with tailored team structures. Separating teams for AI technology from teams for AI experiences can keep development efforts focused, while mixing engineers, product teams, and AI experts from the beginning shortens the path from development to deployment. Teams should prioritize rapid experimentation and stay flexible as the project evolves. This emphasis on multidisciplinary collaboration is especially critical for complex enterprises aiming to deploy GenAI successfully.

Watch the entire session on-demand now to help you get started on your enterprise AI journey!
